- Consulting services

Data Quality & MDM : 4-Wk Pilot implementation

Data Quality & MDM : 4-Wk Pilot implementation

Tredence Inc

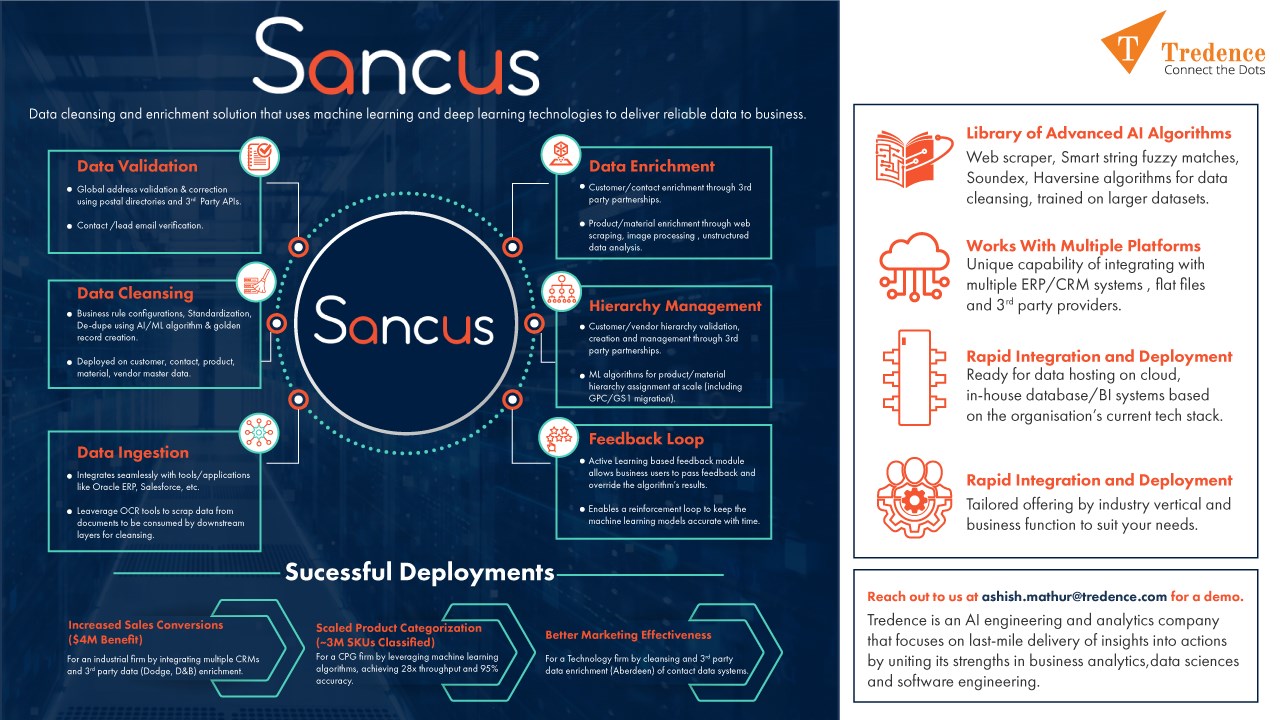

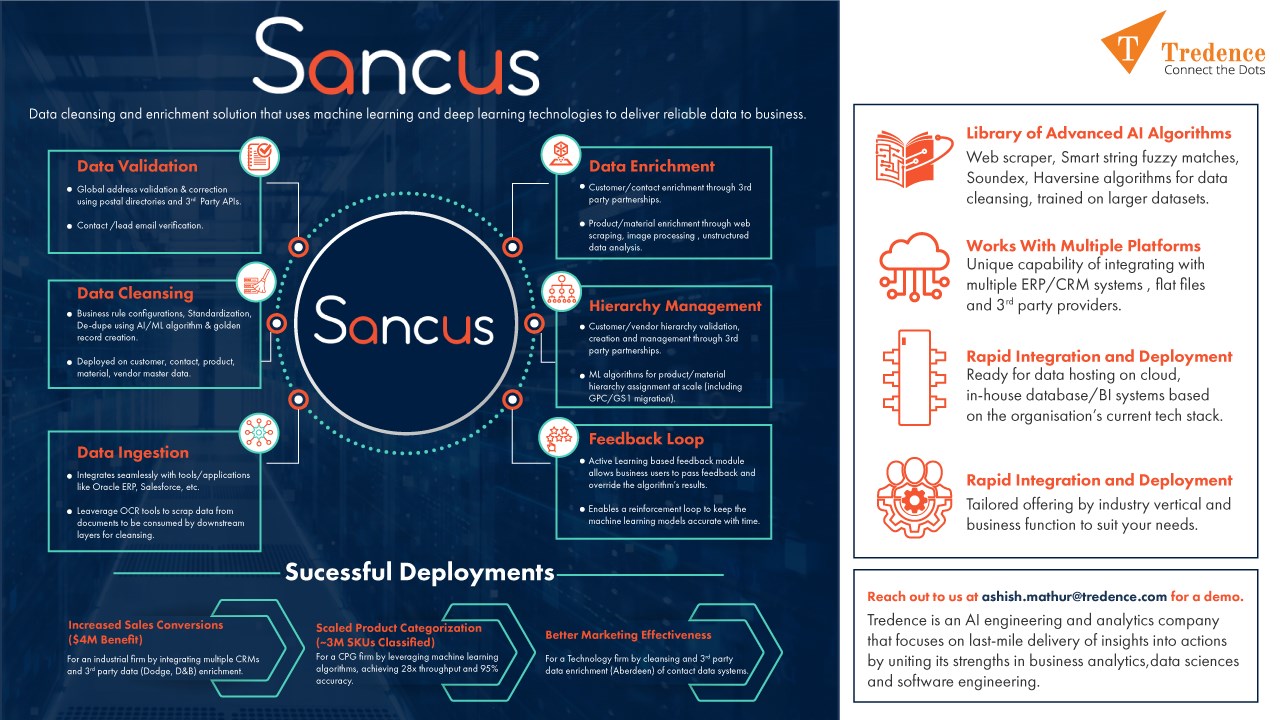

A data cleansing & enrichment solution that uses ML to deliver reliable data to business by removing duplicates and improving quality of customer, contact & vendor data to enable downstream analytics

A data cleansing & enrichment solution that uses ML to deliver reliable data to business by removing duplicates and improving quality of customer, contact & vendor data to enable downstream analytics

Objective: Cleanse and consolidate Customer, Vendor and Contract master data using our ML model that are easily scalable across markets/regions to enhance the quality of derived Business Insights.

Key Challenges Addressed:

- Duplicate entries for Customer or Contact data

- Multiple records that with partial information that reference the same customer / contact

- Inconsistent values across different sources for the same customer/contact record.

- Existing de-dup and cleanse process is extremely time consuming.

- Existing process is purely rule based that does not scale

How do we address your challenges:

- A combination of ML based algorithm for data cleansing and de-dup coupled with a rule based approach to address corner cases

- Ability to enrich the data through API hooks

- Active learning feedback to improve data matching performance

- A centralized Data Quality dashboard to monitor source data quality metrics

- Integration with CRM systems like Salesforce for completing the feedback loop to the source system

Pilot Outcome:

- Consolidated customer and contact master data after removal of duplicates

- Enhanced Customer, Contact and Vendor data.

Implementation Plan: The break-up of the implementation plan is as below:

- Week 1: Spent on ‘Discovery’ to understand the business and data. ‘Setup’ Sancus in the client environment and ‘Run’ the default algorithm without any modifications.

- Week 2-3: Review intermediate output and modify the ML algorithm as per the nuances of the given data.

- Week 4: Review final output, measure accuracy, create golden record & share results.

The solution is built on native Azure components to intelligently scale using below key components:

- Azure Storage Account ([ADLS/Blob]): Storage account to store Input, Intermediate files, and consolidated Output.

- Azure DataBricks: Azure Databricks enables the Sancus algorithm to easily scale for large data volumes.